When you’re selecting an integration platform, it’s vital that you understand your current IT landscape, your future IT strategy and the wider scope of what needs to be achieved.

When assessing platforms for their effectiveness and impact, you must take into account your existing system landscape, integration approaches, developer skillsets, security, monitoring, advanced services such as IoT or stream analytics, and your wider operational and support structures. A solution that looks 'perfect' in isolation but would require large-scale changes to your workforce, or that does not complement your existing and future systems, is probably not fit for purpose.

The cost of an integration platform is a lot more than the cost of its licences. You must also consider the cost of the skilled people required to develop, maintain and support the integrations. Some products emphasise lightweight development approaches; however, strong technical expertise is still required to produce good results with them.

What do we want our architecture to look like?

Ensuring you have a clearly defined architecture is crucial, especially when it comes to integration. A well-defined architecture enables you to select the appropriate tools for fast feature delivery and has a significant impact on the success of your future integrations.

It can also dictate the performance, maintainability, and future-proofing of your platform.

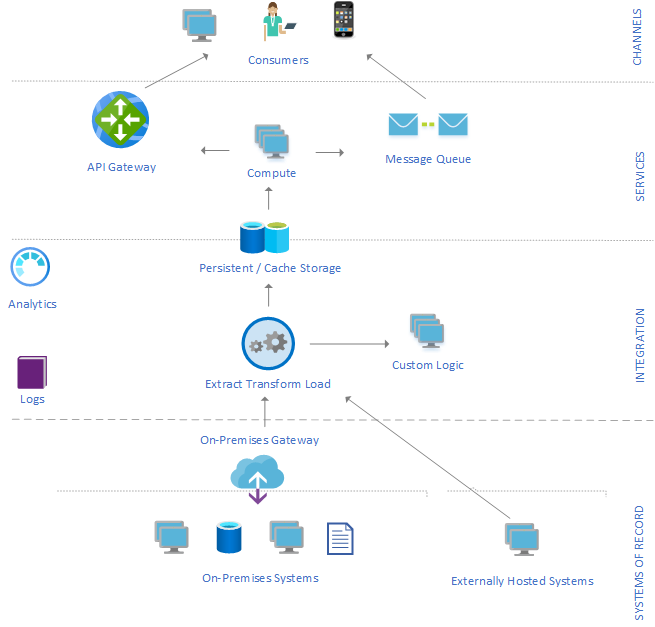

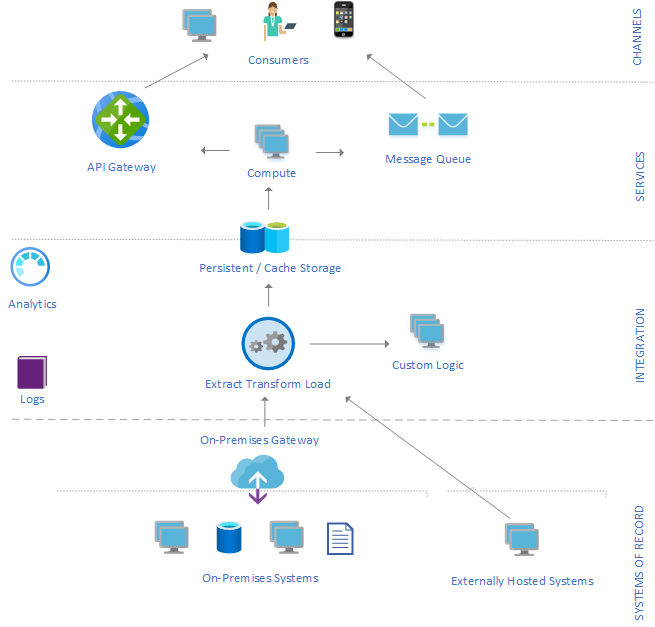

A best-practice architecture for an integration platform should encompass four distinct layers: systems of record (SoR), integration, services and channels.

Systems of record

No doubt your business has a number of core systems that you want to be able to integrate. These are the long-standing, foundational systems that your organisation may have relied on for many years. Only by liberating the data from these systems, whether on-premises or hosted by a third party, will you be able to take full advantage of the data within your business and use it in ways that were previously impossible.

These systems of record and the data within them are often business-critical and integral to the success of your organisation. You’ll therefore want to treat them with care and, wherever possible, standardise and control how integrations with them are developed and deployed.

Does your platform of choice support best practices such as continuous integration/continuous delivery (CI/CD), version control and deployment pipelines? Does it integrate with your existing tooling? Can you use Infrastructure as Code (IaC) to create and recreate environments?

These questions matter because standardisation and automation bring repeatable, predictable development. You want to avoid being bogged down in custom development for each integration, or having to learn a new toolset from scratch before you can begin new integrations.

Ultimately, you want to be able to seamlessly talk to your systems of record, extracting a range of data from these systems to be ingested into your integration platform and liberated for use by other applications and services.

Integration platform

Once you’ve identified the relevant data in the systems of record, you move into the integration layer: the platform itself and the ETL (extract, transform, load) engine at the centre of any integration platform. The ETL engine is responsible for ingesting, cleansing, blending and transforming data into standardised or controlled formats and structures, ready to be surfaced, queried or analysed.
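
To make the extract, cleanse, transform and load stages concrete, here is a minimal Python sketch. It assumes a hypothetical CRM export with made-up field names (`CustID`, `FullName`, `Email`) and uses an in-memory list and dict in place of real connectors and a real target store. It is illustrative only, not how any particular product implements its ETL engine.

```python
from dataclasses import dataclass

# Hypothetical raw export from a system of record; field names are illustrative only.
RAW_CRM_ROWS = [
    {"CustID": " 001 ", "FullName": "Ada Lovelace", "Email": "ADA@Example.com "},
    {"CustID": "", "FullName": "No Id", "Email": "noid@example.com"},
    {"CustID": "002", "FullName": " Alan Turing", "Email": "alan@example.com"},
]


@dataclass(frozen=True)
class Customer:
    """Canonical (standardised) customer shape used inside the platform."""
    customer_id: str
    name: str
    email: str


def extract(rows: list[dict[str, str]]) -> list[dict[str, str]]:
    # A real platform would read from the SoR via a connector; here we copy in-memory rows.
    return [dict(r) for r in rows]


def cleanse(rows: list[dict[str, str]]) -> list[dict[str, str]]:
    # Trim whitespace, normalise email case and drop rows without an identifier.
    cleaned = []
    for r in rows:
        cid = r.get("CustID", "").strip()
        if not cid:
            continue
        cleaned.append({
            "CustID": cid,
            "FullName": r.get("FullName", "").strip(),
            "Email": r.get("Email", "").strip().lower(),
        })
    return cleaned


def transform(rows: list[dict[str, str]]) -> list[Customer]:
    # Map SoR-specific field names onto the canonical model.
    return [Customer(r["CustID"], r["FullName"], r["Email"]) for r in rows]


def load(customers: list[Customer], store: dict[str, Customer]) -> dict[str, Customer]:
    # Upsert into a target keyed by canonical ID (a dict stands in for the target store).
    for c in customers:
        store[c.customer_id] = c
    return store


store = load(transform(cleanse(extract(RAW_CRM_ROWS))), {})
```

Running the pipeline leaves two canonical records in `store`; the row without a `CustID` is dropped during cleansing. Keeping each stage as a separate function mirrors the way ETL tools let you inspect, log and reuse each step.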

Matching, merging and changing data is critical to the success of your integration platform. Your ETL engine should provide these capabilities, along with other transformations that modify the data. Azure tools such as Data Factory and Logic Apps make this available through drag-and-drop design. You should also look for tools that provide logs and analytics to monitor and optimise your ETL processes.
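
Matching and merging is easier to reason about with an example. The sketch below assumes two hypothetical systems (a CRM and a billing system), matches records on a normalised email address and applies a simple survivorship rule in which CRM values win unless they are missing. Real matching rules are usually richer (fuzzy matching, multiple keys), so treat this as an illustration of the idea only.

```python
from typing import Optional

# Hypothetical records from two systems of record describing some of the same people.
crm = [
    {"email": "ada@example.com", "name": "Ada Lovelace", "phone": None},
    {"email": "grace@example.com", "name": "Grace Hopper", "phone": "555-0100"},
]
billing = [
    {"email": "ADA@example.com", "name": "A. Lovelace", "phone": "555-0199"},
    {"email": "alan@example.com", "name": "Alan Turing", "phone": None},
]


def match_key(record: dict) -> str:
    # Assumed matching rule for this sketch: a normalised email address.
    return record["email"].strip().lower()


def merge(primary: dict, secondary: dict) -> dict:
    # Field-level survivorship: prefer the primary system's value,
    # falling back to the secondary system's value when the primary's is missing.
    merged = dict(secondary)
    for field, value in primary.items():
        if value is not None:
            merged[field] = value
    merged["email"] = match_key(merged)
    return merged


def match_and_merge(primary_rows: list[dict], secondary_rows: list[dict]) -> dict[str, dict]:
    by_key = {match_key(r): r for r in secondary_rows}
    result: dict[str, dict] = {}
    for r in primary_rows:
        key = match_key(r)
        other: Optional[dict] = by_key.pop(key, None)
        result[key] = merge(r, other) if other is not None else {**r, "email": key}
    # Records that exist only in the secondary system pass through unchanged.
    for key, r in by_key.items():
        result[key] = {**r, "email": key}
    return result


golden = match_and_merge(crm, billing)
```

Here the two Ada Lovelace records merge into one, taking the name from the CRM and the phone number from billing, while records found in only one system pass through as they are.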

Whatever the makeup of your ETL engine, whether it’s built from Azure components or is the in-built engine of an iPaaS product, it should enable you to enforce data quality and data governance standards. That way, datasets from individual systems can be used together, and the data is prepared for use by the services layer or for surfacing to users at the channel layer.

Services

With your data transformed, you are ready to make that data available to the service layer. This layer is essentially responsible for exposing the data to your various user-facing channels. It is the surface area of your integration platform where managed access to data can take place.

Data published from here should be standardised and viewed through the lens of your organisational operations (not shaped by SoR constraints and peculiarities). You may also wish to segment your data for granular access by integration platform consumers. This segmentation should be driven by a well-formed, enforced data architecture and governance strategy.
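
One way to picture segmenting data for granular access is field-level filtering against the scopes granted to a consumer. In this minimal sketch the scope names and field classifications are hypothetical; in practice they would come from your data architecture and governance strategy, and enforcement might sit in an API gateway or in the services layer itself.

```python
# Hypothetical field-level classification, as a governance strategy might define it.
FIELD_SCOPES = {
    "customer_id": "customer.basic",
    "name": "customer.basic",
    "email": "customer.contact",
    "credit_limit": "customer.finance",
}


def segment(record: dict, granted_scopes: set[str]) -> dict:
    """Return only the fields the consumer's scopes allow.

    Fields with no classification map to None, which is never in
    granted_scopes, so they are withheld by default.
    """
    return {
        field: value
        for field, value in record.items()
        if FIELD_SCOPES.get(field) in granted_scopes
    }


record = {
    "customer_id": "001",
    "name": "Ada Lovelace",
    "email": "ada@example.com",
    "credit_limit": 5000,
    "internal_flag": True,
}
marketing_view = segment(record, {"customer.basic", "customer.contact"})
```

The marketing consumer sees the ID, name and email but not the credit limit. Unclassified fields such as `internal_flag` are withheld by default, which is usually the safer choice.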

The services layer should let you expose data both as RESTful APIs, for controlled access to transformed datasets, and through a service bus, for more ‘hot’, event-driven or change-feed data.
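
The difference between the two access styles can be sketched in a few lines. A consumer pulls current state on request, as it would through a RESTful endpoint, while a change is pushed once to every subscriber of a topic, as a service bus would deliver it. `InMemoryBus` is a toy stand-in written for this example; it is not the API of Azure Service Bus or any other product, and the topic, endpoint and function names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Optional

Handler = Callable[[dict], None]


class InMemoryBus:
    """Toy topic-based publish/subscribe bus: a stand-in for a real service bus, not its API."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Deliver the event to every handler subscribed to this topic.
        for handler in list(self._subscribers[topic]):
            handler(event)


# Pull style: current state read on request
# (conceptually what a hypothetical GET /customers/{id} endpoint would return).
customers = {"001": {"customer_id": "001", "name": "Ada Lovelace"}}


def get_customer(customer_id: str) -> Optional[dict]:
    return customers.get(customer_id)


# Push style: each change is published once and fanned out to all subscribers.
bus = InMemoryBus()
reporting_events: list[dict] = []
mobile_app_events: list[dict] = []
bus.subscribe("customer.changed", reporting_events.append)
bus.subscribe("customer.changed", mobile_app_events.append)


def update_customer(customer_id: str, changes: dict) -> None:
    customers[customer_id].update(changes)
    bus.publish("customer.changed", {"customer_id": customer_id, "changes": changes})


update_customer("001", {"name": "Augusta Ada King"})
```

Both subscribers receive the same change event without polling, while any consumer that only needs the latest state can still ask for it directly.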

To be truly integrated, the platform should connect to your existing organisational identity management (such as Azure Active Directory, now Microsoft Entra ID). This simplifies access control and strengthens security and data governance while ensuring consistency with the rest of your IT landscape.

With your service layer configured, you are able to expose your data to your channels and use it to improve the experiences you provide to your users.

Channels

How do your users engage with you? Through web-based systems? A mobile app? A physical interaction?

Analysis of the expected user channels will inform your decisions about the earlier layers of your architecture. Specific use cases may require customised or aggregated APIs at the top level of a multi-tier architecture and/or have unique requirements around the user experience.

Your platform should therefore support a “layering strategy”, representing and aggregating multiple SoR datasets and offering distinct APIs for different applications while minimising duplication. Low-level system APIs present data in a form that closely resembles the underlying SoR; mid-level APIs provide filtered views and additional capabilities on top of the low-level APIs.

Consumer (top-level) APIs then provide the final layer of aggregation, tailoring data to specific use cases to give your end users a seamless experience.
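
The layering strategy can be illustrated with plain functions standing in for each API tier. In this sketch the SoR field names, the `STATUS` codes and the mobile account screen are all hypothetical: the system APIs return SoR-shaped data, the mid-level APIs filter and standardise it, and the consumer API aggregates it for a single channel.

```python
# System (low-level) APIs: hypothetical wrappers returning data shaped like each SoR.
def crm_system_api() -> list[dict]:
    return [
        {"CUST_NO": "001", "CUST_NM": "Ada Lovelace", "STATUS": "A"},
        {"CUST_NO": "002", "CUST_NM": "Alan Turing", "STATUS": "I"},
    ]


def orders_system_api() -> list[dict]:
    return [
        {"order_ref": "SO-1", "cust": "001", "total_pence": 2500},
        {"order_ref": "SO-2", "cust": "001", "total_pence": 1000},
        {"order_ref": "SO-3", "cust": "002", "total_pence": 4000},
    ]


# Mid-level APIs: filtered, standardised views built on the system APIs.
def active_customers() -> list[dict]:
    # Assumes "A" means active in the hypothetical CRM.
    return [
        {"id": r["CUST_NO"], "name": r["CUST_NM"]}
        for r in crm_system_api()
        if r["STATUS"] == "A"
    ]


def orders_for(customer_id: str) -> list[dict]:
    return [o for o in orders_system_api() if o["cust"] == customer_id]


# Consumer (top-level) API: aggregates mid-level data for one channel,
# e.g. a hypothetical mobile app account screen.
def mobile_account_summary() -> list[dict]:
    summary = []
    for c in active_customers():
        orders = orders_for(c["id"])
        summary.append({
            "name": c["name"],
            "order_count": len(orders),
            # Convert pence to pounds for display.
            "total_spent": sum(o["total_pence"] for o in orders) / 100,
        })
    return summary
```

Because the consumer API only calls the mid-level APIs, a change to the CRM’s field names would be absorbed in the mid-level layer rather than in every channel.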

To find out more about platform selection strategy and get a full considerations checklist, download our free eBook, the CIO's Guide to Enterprise Integration, now.